Message boards : Number crunching : long running 290px..-Noelia_KLEBE WUs


I have received a bunch of 290px...Noelia_KLEBEs. They will be lucky to finish within 24 hours (660 and 660 Ti) or 2 days (560 Ti, with the additional penalty of only 1 GB of memory). Are these cards now too slow to process long WUs, or is there a problem with these tasks that makes them run so much longer than earlier WUs?

ID: 31836

> I have received a bunch of 290px...Noelia_KLEBEs. They will be lucky to finish within 24 hours (660 and 660 Ti) or 2 days (560 Ti, with the additional penalty of only 1 GB of memory).

I've received a couple of them too. They need about 1.2 GB of GPU memory.

> Are these cards now too slow to process long WUs,

These cards are not too slow to process them, as the deadline is 5 days. However, these cards are too slow to achieve the 24h or 48h return bonus.

> ... or is there a problem with them that they run so much longer than earlier WUs?

I had a problem with one of them: the GPU usage was only 9-11%, with some spikes to 95%. I restarted the host, and the symptom disappeared for as long as I watched.

ID: 31840

On my GTX 660 Ti and GTX 660, the last NOELIA_KLEBE WUs finished in just under 11h and 14h:

ID: 31841

Previous Noelia KLEBEs have been OK; it's just this batch starting with 290px. Anyway, the one on the 560 Ti just crashed after 20 hours, after I had to reboot the PC, so I'll give long WUs a rest for the moment and see if it handles the new Folding WUs any better.

ID: 31844

I just finished a NOELIA 290px86 on my GTX 660. It took 19h40m, exactly six hours longer than the previous NOELIA 148px111, and for the same credit: 142,800. :(

ID: 31846

> I have received a bunch of 290px...Noelia_KLEBEs. They will be lucky to finish within 24 hours (660 and 660 Ti) or 2 days (560 Ti, with the additional penalty of only 1 GB of memory).

Just found 2 of them on my 650 Ti 1GB cards: 19-20 hours of run time and less than 40% completed. Noelia strikes again :-(

ID: 31851

I'm not keen on 20h tasks unless the failure rate is very low. Of late I've mostly been running short WUs anyway.

ID: 31886

Current task 284px89-NOELIA_KLEBE-0-3-RND9863 shows 6570 s elapsed at 10% progress!
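The extrapolation implied by the post above can be sketched as follows: a minimal Python example, assuming progress advances linearly with elapsed time (real tasks may not behave this way). The helper names and window labels are hypothetical; the 24h and 48h bonus windows and the 5-day deadline are the figures quoted earlier in this thread, and no bonus percentages are modelled because none are stated here.

```python
# Hypothetical helper: linearly extrapolates a work unit's total runtime
# from elapsed wall time and reported progress. Assumes progress advances
# at a constant rate, which real tasks may not do.

HOUR = 3600  # seconds per hour

# Return windows mentioned in this thread: 24h and 48h bonus windows and
# the 5-day deadline. Bonus percentages are not stated, so none are modelled.
WINDOWS = [
    (24 * HOUR, "within 24h bonus window"),
    (48 * HOUR, "within 48h bonus window"),
    (5 * 24 * HOUR, "no bonus, but before 5-day deadline"),
]


def estimate_total_seconds(elapsed_s: float, fraction_done: float) -> float:
    """Linear estimate: total runtime = elapsed / fraction done."""
    if not 0.0 < fraction_done <= 1.0:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_s / fraction_done


def classify(total_s: float) -> str:
    """Return the first return window the estimated runtime fits into."""
    for limit_s, label in WINDOWS:
        if total_s <= limit_s:
            return label
    return "misses 5-day deadline"


if __name__ == "__main__":
    # Figures from the post above: 6570 s elapsed at 10% progress.
    total = estimate_total_seconds(6570, 0.10)
    print(f"estimated total: {total / HOUR:.2f} h -> {classify(total)}")
```

With 6570 s at 10%, the linear estimate is about 65,700 s (18.25 h), which would still fit inside the 24h window, but only if the rate observed so far holds for the rest of the task.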

ID: 31893

I just lost two more long-run Noelia tasks... only a few seconds lost, but I have gone back to short-run tasks again.

ID: 31900

I ran into a few of these slow units myself. They were not the 20+ hour units, but they did take 14 to 16 hours to finish.

ID: 31903

I too have significant problems with 063px85-NOELIA_KLEBE-1-3-RND2279. It looks like it hangs the driver: when this task is started, it never makes it onto the GPU, and the task hangs at 0% (though it appears to be running in BOINC). After that, the GPU cannot run any OpenCL tasks, not even very simple test programs; only a reboot gets the system out of this state.

I have now suspended GPUGRID, and the GPU is running an Einstein task just fine, so the OS and the hardware are OK. This is a 64-bit Linux system with the 310.44 driver. My card is a GTX 660 Ti with 2 GiB of memory, so overrunning the memory should not be an issue. I will kill the NOELIA task and see if I can get something that runs.

I have to say that I am getting pretty fed up with these NOELIA tasks; they cause way too many problems. The other ones all run just fine, but it appears that the NOELIA tasks are the only ones that break...

ID: 31904

I downloaded a few more of these units. The 100-series px... seem to be running normally (9 to 12 hours finishing time on my computers); it is the 200-series px... that are the slow ones (14+ hours finishing time on my computers).

ID: 31907

Hi, Bedrich:

ID: 31908

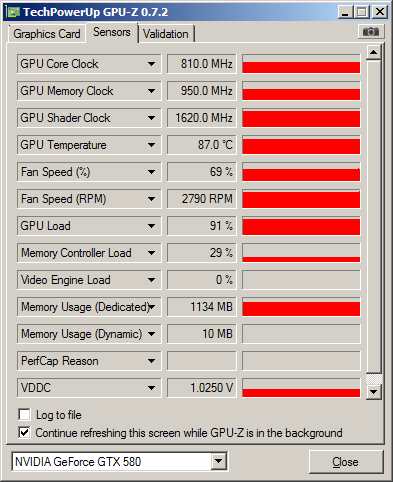

Chip, 87°C is too high. I suggest you improve the cooling of your system/GPU.

ID: 31909

Seems I haven't got a Noelia 290px.. WU yet, but the ones I did get finish OK on my troublesome system. Even with the highly recommended 310.90 driver, Santi SR tasks fail, but Noelia's steam on. They take a little longer than they did a few weeks back.

ID: 31915

In the last three days, I had 7 Noelia units crash. That's a lot for me, and so far they have crashed for everybody else too. Looks like you have a problem here.

ID: 31925

The same strings in stderr.txt:

- `(0x3) - exit code 3 (0x3)`
- `SWAN : FATAL : Cuda driver error 999 in file 'swanlibnv2.cpp' in line 1574.`
- `Assertion failed: a, file swanlibnv2.cpp, line 59`

Windows event:

- Log Name: Application
- Source: Application Error
- Date: 09.08.2013 17:39:07
- Event ID: 1000
- Task Category: (100)
- Level: Error
- Keywords: Classic
- User: N/A
- Computer: `******`
- Description:
  - Faulting application name: acemd.2865P.exe, version: 0.0.0.0, time stamp: 0x511b9dc5
  - Faulting module name: acemd.2865P.exe, version: 0.0.0.0, time stamp: 0x511b9dc5
  - Exception code: 0x40000015
  - Fault offset: 0x00015ad1
  - Faulting process id: 0x11c8
  - Faulting application start time: 0x01ce950e28118082
  - Faulting application path: `C:\ProgramData\BOINC\projects\www.gpugrid.net\acemd.2865P.exe`
  - Faulting module path: `C:\ProgramData\BOINC\projects\www.gpugrid.net\acemd.2865P.exe`
  - Report Id: 71992cef-0101-11e3-8cc4-8c89a52b18d0

ID: 31927

Wow, the longest unit I ever had o.O

ID: 31945

Oh, a "normal" computation error on a KLEBE can hang a GPU too. That's new to me, since my equipment has proven resistant to most of the problems here. Computer reset -> works as usual ^^

ID: 31950
